By David Kreda and Joshua Mandel

The year 2014 will see wide-scale production and exchange of Consolidated CDA (C-CDA) documents among healthcare providers. Indeed, live production of C-CDAs is already underway for anyone using a Meaningful Use 2014-certified EHR. C-CDA documents fuel several aspects of Meaningful Use, including transitions of care and patient-facing download and transmission.

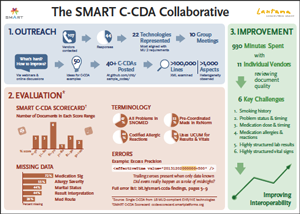

This impending deluge of documents represents a huge potential for interoperability, but it also presents substantial technical challenges. We forecast these challenges with unusual confidence because of what we learned during the SMART C-CDA Collaborative, an eight-month project conducted with 22 EHR and HIT vendors.

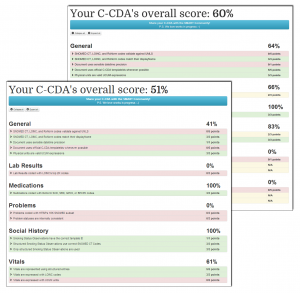

Our effort included analyzing vendor C-CDA documents, scoring them with a C-CDA scorecard tool we developed, and reviewing our results through customized one-on-one sessions with 11 of the vendors. The problems we uncovered arose for a number of reasons, including:

- material ambiguities in the C-CDA specification

- accidental misinterpretations of the C-CDA specification

- lack of authoritative “best practice” examples for C-CDA generation

- errors induced by the certification process itself: vendors are incentivized to produce documents that trigger no warnings in the official validator (rather than documents that correctly convey the underlying data)

- data coding errors that reflect specific mapping and translation decisions that hospitals and providers may make independently of EHR vendors

Our full findings are set out in a detailed briefing we provided to the Office of the National Coordinator for Health IT (ONC).

The key takeaway from our effort is this: live exchange of C-CDA documents will omit relevant clinical information and increase the burden of manual review for provider organizations receiving the C-CDA documents.

Not all of these C-CDA difficulties will be fixable, but many could be if they were characterized easily and consistently. To that end, we have proposed a lightweight data quality reporting measure that, combined with automated, open-source tooling, would allow vendors and providers to measure and report on C-CDA document errors in an industry-consistent manner.

We crafted a proposal for how this might be done, the key section of which follows:

Proposal: Informatics Data Quality Metrics on Production C-CDAs

Our findings make a case for lightweight, automated reporting to assess the aggregate quality of clinical documents in real-world use. We recommend starting with an existing assessment tool such as Model-Driven Health Tools or the SMART C-CDA Scorecard. This tool would form the basis of an open-source data quality service that would:

- Run within a provider firewall or at a trusted cloud provider

- Automatically process documents posted by an EHR

- Assess each document to identify errors and yield a summary score

- Generate interval reports to summarize bulk data coverage and quality

- Expose reports through an information dashboard

- Facilitate MU attestation
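The per-document assessment step ("assess each document to identify errors and yield a summary score") can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the SMART C-CDA Scorecard or Model-Driven Health Tools: the three rubrics (the C-CDA US Realm Header template ID is declared, every coded element names its `codeSystem`, and timestamps use the HL7 digits-only form) and the names `assess_ccda`, `Assessment`, and `SAMPLE` are hypothetical choices made for this sketch. The summary score is simply the percentage of individual checks that pass.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field

HL7 = "{urn:hl7-org:v3}"  # C-CDA documents use the HL7 v3 namespace
US_REALM_HEADER = "2.16.840.1.113883.10.20.22.1.1"  # C-CDA US Realm Header templateId
# Hypothetical rubric: HL7 timestamps are digits only (YYYY[MM[DD[HH[MM[SS]]]]]),
# optionally with fractional seconds and a +/-HHMM offset; no dashes or colons.
TS_PATTERN = re.compile(r"^\d{4}(\d{2}){0,5}(\.\d+)?([+-]\d{4})?$")


@dataclass
class Assessment:
    checks: int = 0
    errors: list = field(default_factory=list)

    def record(self, ok: bool, message: str) -> None:
        self.checks += 1
        if not ok:
            self.errors.append(message)

    @property
    def score(self) -> float:
        """Summary score: percentage of individual checks that passed."""
        if self.checks == 0:
            return 0.0
        return 100.0 * (self.checks - len(self.errors)) / self.checks


def assess_ccda(xml_text: str) -> Assessment:
    """Run the (hypothetical) rubric set against one C-CDA document."""
    result = Assessment()
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        result.record(False, f"not well-formed XML: {exc}")
        return result

    result.record(root.tag == HL7 + "ClinicalDocument", "root is not ClinicalDocument")
    header_ids = {t.get("root") for t in root.findall(HL7 + "templateId")}
    result.record(US_REALM_HEADER in header_ids, "US Realm Header templateId missing")

    # Every coded value should say which code system it comes from.
    for code in root.iter(HL7 + "code"):
        if code.get("code") is not None:
            result.record(code.get("codeSystem") is not None,
                          f"code {code.get('code')} lacks codeSystem")

    # Timestamps must use the HL7 TS form, not ISO 8601 with dashes.
    for tag in ("effectiveTime", "low", "high", "time"):
        for el in root.iter(HL7 + tag):
            value = el.get("value")
            if value is not None:
                result.record(bool(TS_PATTERN.match(value)),
                              f"malformed timestamp {value!r}")
    return result


# Hypothetical test document with one uncoded section and one ISO-style date.
SAMPLE = """<ClinicalDocument xmlns="urn:hl7-org:v3">
  <templateId root="2.16.840.1.113883.10.20.22.1.1"/>
  <code code="34133-9" codeSystem="2.16.840.1.113883.6.1"/>
  <effectiveTime value="20140115"/>
  <component><structuredBody><component><section>
    <code code="11450-4"/>
    <entry><observation>
      <effectiveTime><low value="2013-06-01"/></effectiveTime>
    </observation></entry>
  </section></component></structuredBody></component>
</ClinicalDocument>"""

if __name__ == "__main__":
    report = assess_ccda(SAMPLE)
    print(f"{report.score:.1f}")  # 66.7
    for error in report.errors:
        print(error)
```

A production service would apply a far larger rubric set and might weight rubrics by clinical impact; an unweighted pass rate is used here only because it is easy to read and compare across documents.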

We recognize that MU2 rules impose an administrative burden on providers, including a range of quality reporting requirements that are not always of direct clinical utility. Here we propose something distinctly different:

- ONC’s EHR Certification Program. We propose two laser-focused requirements:

  a. Any C-CDA generated by an EHR as part of the certification testing process must be saved and shared with ONC, as a condition of certification.

  b. In production, any certified EHR must be able to perform “fire-and-forget” routing of inbound and outbound C-CDAs, posting to a data quality service.

- CMS’s MU Attestation Requirements. We propose a minimal, straightforward, copy/paste reporting requirement. The PHI-free report is directly generated by the data quality service and simply passed along to CMS for attestation.

These two steps constitute a minimal yet effective path for empowering providers to work with EHR vendors to assess, discuss, and ultimately improve data quality.
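On the EHR side, the “fire-and-forget” routing in requirement b could look something like the following sketch, which assumes the data quality service accepts an HTTP POST of the raw C-CDA XML. The endpoint URL, the `X-CCDA-Direction` header, and the `FireAndForgetRouter` class are all hypothetical. The essential property is that `route()` returns immediately and never raises, so a slow or unavailable quality service cannot delay clinical workflow: documents are queued, posted by a background thread, and failures are logged rather than retried.

```python
import logging
import queue
import threading
import urllib.request

log = logging.getLogger("dq-router")


def http_post(url: str, body: bytes, direction: str) -> None:
    """POST one C-CDA to the (hypothetical) data quality service endpoint."""
    req = urllib.request.Request(
        url,
        data=body,
        method="POST",
        # X-CCDA-Direction is an invented header marking inbound vs outbound.
        headers={"Content-Type": "application/xml", "X-CCDA-Direction": direction},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()


class FireAndForgetRouter:
    def __init__(self, url: str, send=http_post, max_pending: int = 1000):
        self.url = url
        self._send = send
        self._queue = queue.Queue(maxsize=max_pending)
        # Daemon thread: never keeps the EHR process alive on shutdown.
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def route(self, ccda_xml: bytes, direction: str) -> None:
        """Called by the EHR for every C-CDA; returns immediately, never raises."""
        try:
            self._queue.put_nowait((ccda_xml, direction))
        except queue.Full:
            log.warning("data quality queue full; dropping one %s C-CDA", direction)

    def _run(self) -> None:
        while True:
            body, direction = self._queue.get()
            try:
                self._send(self.url, body, direction)
            except Exception:
                log.exception("failed to post %s C-CDA", direction)
            finally:
                self._queue.task_done()

    def drain(self) -> None:
        """Block until every queued document has been attempted (useful in tests)."""
        self._queue.join()
```

Dropping documents when the queue is full is a deliberate trade-off in this sketch: the quality metrics are aggregate statistics, so losing an occasional sample matters far less than blocking the EHR.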

At a technical level, the following components are required to support the initiative:

- A data-quality service that leverages existing C-CDA validation technology

- EHRs that route inbound and outbound C-CDAs to the data-quality service

- A dashboard web application that generates simple reports enabling provider, hospital, or IT staff to monitor C-CDA data quality and perform MU attestation
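The dashboard’s interval report, which would double as the PHI-free attestation report, could be built from per-document results that carry only quality metadata. In this sketch the `DocumentResult` fields, the `interval_report` function, and the report layout are hypothetical; the point is that nothing patient-identifying ever enters the aggregation, so the serialized output could, in principle, be pasted directly into an attestation form.

```python
import json
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass(frozen=True)
class DocumentResult:
    # Only quality metadata is stored: no patient identifiers or clinical content.
    received: date
    direction: str       # "inbound" or "outbound"
    score: float         # 0-100 summary score from the assessment step
    error_types: tuple   # e.g. ("missing codeSystem",)


def interval_report(results, start: date, end: date) -> dict:
    """Summarize document coverage and quality for one reporting interval."""
    in_window = [r for r in results if start <= r.received <= end]
    by_direction = {}
    for direction in ("inbound", "outbound"):
        scores = [r.score for r in in_window if r.direction == direction]
        by_direction[direction] = {
            "documents": len(scores),
            "mean_score": round(mean(scores), 1) if scores else None,
        }
    error_counts = Counter(e for r in in_window for e in r.error_types)
    return {
        "period": {"start": start.isoformat(), "end": end.isoformat()},
        "documents": len(in_window),
        "by_direction": by_direction,
        "top_errors": error_counts.most_common(5),
    }
```

For example, `json.dumps(interval_report(results, date(2014, 1, 1), date(2014, 3, 31)), indent=2)` would produce a quarterly summary of document counts, mean scores by direction, and the five most frequent error types.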

Our proposal describes a service that is technically doable (even “nearly done” if the SMART C-CDA Scorecard were made a web service). What remains unknown is whether ONC and CMS will support it and whether providers will adopt processes that put quality metrics to good use. (See Thomas Redman’s recent Harvard Business Review article, “Data’s Credibility Problem.”)

To sum up: the case for data quality metrics is a strong one, and we encourage those who believe in the efficacy of such metrics to voice their support to ONC and CMS.
